DEA - Dexterous Eye-guided Aiming [ENG]

by Tullio Facchinetti

DEA consists of a tracking device with two degrees of freedom, designed to orient a laser pointer toward a moving target and to follow its trajectory by vision. A pneumatic shooting device launches a plastic sphere at the target to verify the correctness of the prediction. This system can be useful whenever an object needs to be not only tracked but also caught in some manner, as in dynamic grasping operations. The approach can also be useful when the exact location of a target at some future time needs to be estimated from its current trajectory, as is done in astronomical applications for tracking celestial bodies.

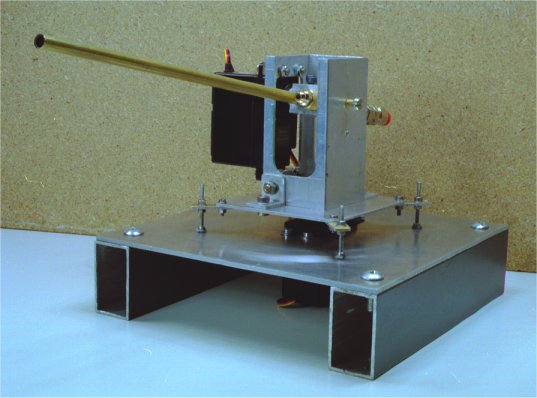

The visual tracking system consists of a two-degrees-of-freedom device driven by two independent servomotors. The servomotors are controlled by a PIC-based board, connected to the PC through a standard RS232 serial port. A 20 cm blowpipe, with a laser pointer aligned with its axis, can be rotated to track a moving target and thus follow its trajectory. A pneumatic valve attached to the pipe is used to launch a plastic sphere at the target in order to verify the correctness of the prediction. A single fixed camera monitors the target position. With a single camera the distance of the target cannot be measured, so we assume that the target moves in a vertical plane at a known distance.

A complete description of the system is provided in the master's thesis [Italian only].

System calibration

The coordinate conversion from the image space to the actuation space is performed by a multi-layer neural network, trained with the back propagation algorithm during a calibration phase. Using a neural network avoids (i) modelling the optical distortion of the camera lens and (ii) building a three-dimensional model of the physical environment containing both the robot and the target motion plane. The first advantage (i) makes the system independent of the optical parameters of the lens, so that the lens can be freely changed and the system re-calibrated by an automated training phase. The second advantage (ii) concerns the position of the robot relative to the target motion plane, and the orientation of that plane: any change in them can be absorbed automatically by an off-line re-calibration of the network.

The neural network is trained by an automatic calibration procedure, which generates the training set by moving the motors so that the laser spot is positioned at different points of the visual field. For each spot position, the system records the coordinates in the image plane and the corresponding servomotor control values. Once trained, the network associates a pair of motor coordinates with each point in the visual field, so that the motors can be driven directly from image-plane coordinates.
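As an illustration of this calibration step, the following is a minimal sketch of a small network trained by back propagation to map image coordinates to motor values. It is not the original implementation: the network size, the learning rate, the normalization of both coordinate sets to a small range, and the synthetic "pan" and "tilt" responses standing in for the recorded laser-spot samples are all assumptions made for the example.

```python
import numpy as np

def train_calibration_net(pixels, motors, hidden=8, lr=0.1, epochs=3000, seed=0):
    """Train a 2-hidden-2 network with plain back propagation (batch gradient descent).

    pixels: (n, 2) image coordinates, assumed normalized to [-1, 1].
    motors: (n, 2) servo control values, assumed normalized as well.
    Returns a function mapping image coordinates to motor values.
    """
    rng = np.random.default_rng(seed)
    w1 = rng.normal(0.0, 0.5, (2, hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(0.0, 0.5, (hidden, 2))
    b2 = np.zeros(2)
    n = len(pixels)
    for _ in range(epochs):
        h = np.tanh(pixels @ w1 + b1)       # hidden layer activations
        out = h @ w2 + b2                   # linear output layer
        err = out - motors                  # derivative of the squared error
        dh = (err @ w2.T) * (1.0 - h ** 2)  # error propagated back through tanh
        w2 -= lr * (h.T @ err) / n
        b2 -= lr * err.mean(axis=0)
        w1 -= lr * (pixels.T @ dh) / n
        b1 -= lr * dh.mean(axis=0)

    def image_to_motor(p):
        return np.tanh(np.asarray(p, dtype=float) @ w1 + b1) @ w2 + b2

    return image_to_motor

# Synthetic stand-in for the recorded (spot position, servo command) pairs.
grid = np.linspace(-1.0, 1.0, 8)
pixels = np.array([(x, y) for x in grid for y in grid])
motors = np.column_stack([
    0.8 * pixels[:, 0] + 0.1 * pixels[:, 0] * pixels[:, 1],  # made-up pan response
    0.7 * pixels[:, 1] + 0.1 * pixels[:, 0] ** 2,            # made-up tilt response
])
image_to_motor = train_calibration_net(pixels, motors)
mse = float(np.mean((image_to_motor(pixels) - motors) ** 2))
print("mean squared calibration error:", mse)
```

In the real system the training pairs would come from the automatic procedure described above rather than from a formula, and changing the lens or moving the robot would only require collecting new pairs and training again.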

Visual tracking

During tracking, image processing runs at frame rate (50 Hz) and consists of a simple threshold operation. Both the laser spot and the target are extracted from the background solely on the basis of the difference in pixel grey levels. Once an object is isolated, its position is detected by computing its center of mass. To reduce the scanning area, the moving target is searched for in a small window centered on its predicted position, rather than in the entire visual field. If the target is not found in the predicted area, the search is repeated in progressively larger regions until, in the worst case, the entire visual field is scanned. If the system is well designed, the target is found very quickly in the predicted area most of the time. However, in rare situations (corresponding to abrupt trajectory changes), a larger area needs to be scanned, causing the task execution time to increase substantially (computation time increases quadratically as a function of the number of trials).
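The search strategy can be sketched as follows. This is an illustrative example, not the original code: the function names, the threshold value, the initial window size, and the choice of growing the window by a fixed step at each trial are assumptions. The center of mass is computed here as the unweighted mean of the pixel coordinates above the threshold.

```python
import numpy as np

def centroid_in_window(frame, center, half, threshold):
    """Return the (row, col) center of mass of above-threshold pixels, or None."""
    rows, cols = frame.shape
    r0, r1 = max(0, center[0] - half), min(rows, center[0] + half + 1)
    c0, c1 = max(0, center[1] - half), min(cols, center[1] + half + 1)
    mask = frame[r0:r1, c0:c1] > threshold
    if not mask.any():
        return None
    rr, cc = np.nonzero(mask)
    return (float(r0 + rr.mean()), float(c0 + cc.mean()))

def find_target(frame, predicted, threshold=128, start_half=8, step=8):
    """Search a growing window around the predicted position.

    Returns (position or None, number of trials). The window half-size grows
    linearly with each trial, so the scanned area grows quadratically.
    """
    half, trials = start_half, 0
    limit = max(frame.shape)  # at this half-size the window covers the frame
    while True:
        trials += 1
        pos = centroid_in_window(frame, predicted, half, threshold)
        if pos is not None or half >= limit:
            return pos, trials
        half += step

# Synthetic 100x120 grey-level frame with a bright 3x3 target.
frame = np.zeros((100, 120), dtype=np.uint8)
frame[40:43, 70:73] = 255
good_guess = find_target(frame, predicted=(42, 70))
bad_guess = find_target(frame, predicted=(10, 10))
print(good_guess, bad_guess)
```

With a good prediction the target is found at the first trial in a small window; with a poor prediction several trials are needed and each one scans a larger area, which is the cost increase described above.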

Target positions are estimated through a Kalman filter, which uses the past trajectory coordinates to provide the target position at a desired future time. In this way, the blowpipe can point in the correct direction and shoot at the correct time.

Kalman filtering theory requires a physical model of the target motion. The model we used is based on the kinematic equations of a point mass and covers motions up to uniform acceleration, using a third-order system of differential equations. More accurate and reliable estimators could be obtained if specific knowledge of the motion features were available, but they would be less general, and less effective in the presence of noise or when the trajectory differs from the expected one.
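A minimal sketch of such a filter, for a single image axis, is given below. The state holds position, velocity, and acceleration, and the transition matrix is the discrete form of the uniform-acceleration kinematics. The class and function names, the noise parameters `q` and `r`, the diagonal process noise, and the synthetic trajectory are assumptions chosen for illustration; only the 50 Hz frame period comes from the description above.

```python
import numpy as np

DT = 1.0 / 50.0  # frame period, from the 50 Hz frame rate

def transition(dt):
    """State transition for [position, velocity, acceleration] over dt seconds."""
    return np.array([[1.0, dt, 0.5 * dt * dt],
                     [0.0, 1.0, dt],
                     [0.0, 0.0, 1.0]])

class AxisKalman:
    """Kalman filter for one image axis under a constant-acceleration model."""

    def __init__(self, q=1e-6, r=1e-2):
        self.x = np.zeros(3)                # state estimate
        self.P = np.eye(3) * 1e3            # large initial uncertainty
        self.F = transition(DT)
        self.Q = np.eye(3) * q              # process noise (assumed value)
        self.R = r                          # measurement noise variance (assumed)
        self.H = np.array([1.0, 0.0, 0.0])  # only the position is measured

    def update(self, z):
        """Advance one frame and correct the state with the measured position z."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        s = self.H @ self.P @ self.H + self.R   # innovation variance
        k = self.P @ self.H / s                 # Kalman gain
        self.x = self.x + k * (z - self.H @ self.x)
        self.P = self.P - np.outer(k, self.H @ self.P)

    def predict_position(self, horizon):
        """Extrapolate the position `horizon` seconds past the last measurement."""
        return float((transition(horizon) @ self.x)[0])

def true_position(t):
    return 100.0 + 50.0 * t - 20.0 * t * t  # synthetic trajectory, arbitrary units

kf = AxisKalman()
for frame_index in range(100):
    kf.update(true_position(frame_index * DT))
predicted = kf.predict_position(0.2)
print("predicted:", predicted, "true:", true_position(99 * DT + 0.2))
```

One such filter per image axis would give a predicted target position at the chosen future time, which can then be converted to motor coordinates and used to decide when to shoot.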

Software

The software developed for the tracking device involves three main phases: a manual calibration, consisting of parameter tuning and scan window sizing; an automatic calibration, consisting of training set generation and neural network training; and the visual tracking phase.

The application was developed in a modular fashion, so that each phase is executed only when necessary. The calibration must be repeated whenever the environmental conditions change or the robot is moved from its position. Once the calibration is performed, the tracking step can be executed several times with the same settings. Moreover, if only certain parameters vary, the system can re-calibrate just those. For instance, if the brightness level suddenly changes in the target motion environment, e.g. when the light in a room is turned on or off, the image processing threshold values can simply be reset independently of the other parameters.

Results

The following two videos refer to a target with a diameter of 3 cm, placed at a distance of 3 meters.