
Hi, I am Noctie, a human-like digital chess AI! Play against me and I'll try to match your skill level and estimate your rating.

In recent years, large-scale transformer-based language models have become the pinnacle of neural networks used in NLP tasks. They grow in scale and complexity every month, but training such models requires millions of dollars, the best experts, and years of development. That’s why only major IT companies have access to this state-of-the-art technology. However, researchers and developers all over the world need access to these solutions. Without new research, their growth could wane. The only way to avoid this is by sharing best practices with the developer community.

We’ve been using the YaLM family of language models in our Alice voice assistant and Yandex Search for more than a year now.

GPT-3, or Generative Pre-trained Transformer 3, is a piece of AI from the OpenAI group that takes text from the user, and writes a lot more for them.

And, freaking heck, am I impressed at what folks have managed to build around the GPT-3 technology.

The modern project of creating human-like artificial intelligence (AI) started after World War II, when it was discovered that electronic computers are not just number-crunching machines, but can also manipulate symbols. It is possible to pursue this goal without assuming that machine intelligence is identical to human intelligence. This is known as weak AI. However, many AI researchers have pursued the aim of developing artificial intelligence that is in principle identical to human intelligence, called strong AI. Weak AI is less ambitious than strong AI, and therefore less controversial. However, there are important controversies related to weak AI as well. This paper focuses on the distinction between artificial general intelligence (AGI) and artificial narrow intelligence (ANI). Although AGI may be classified as weak AI, it is close to strong AI because one of the chief characteristics of human intelligence is its generality. Although AGI is less ambitious than strong AI, there were critics almost from the very beginning. One of the leading critics was the philosopher Hubert Dreyfus, who argued that computers, which have no body, no childhood and no cultural practice, could not acquire intelligence at all. One of Dreyfus’ main arguments was that human knowledge is partly tacit, and therefore cannot be articulated and incorporated in a computer program. However, today one might argue that new approaches to artificial intelligence research have made his arguments obsolete. Deep learning and Big Data are among the latest approaches, and advocates argue that they will be able to realize AGI. A closer look reveals that although development of artificial intelligence for specific purposes (ANI) has been impressive, we have not come much closer to developing artificial general intelligence (AGI). The article further argues that this is in principle impossible, and it revives Hubert Dreyfus’ argument that computers are not in the world.

Google's DeepMind has just released a new academic paper on AlphaZero -- the general purpose artificial intelligence system that mastered chess through self-play and went on to defeat the world champion of chess engines, Stockfish. In this video chess International Master Anna Rudolf takes a look at a never-before-seen game from a match played in January 2018, and discusses how the playing style and attacking chess of AlphaZero compare to computers and humans.

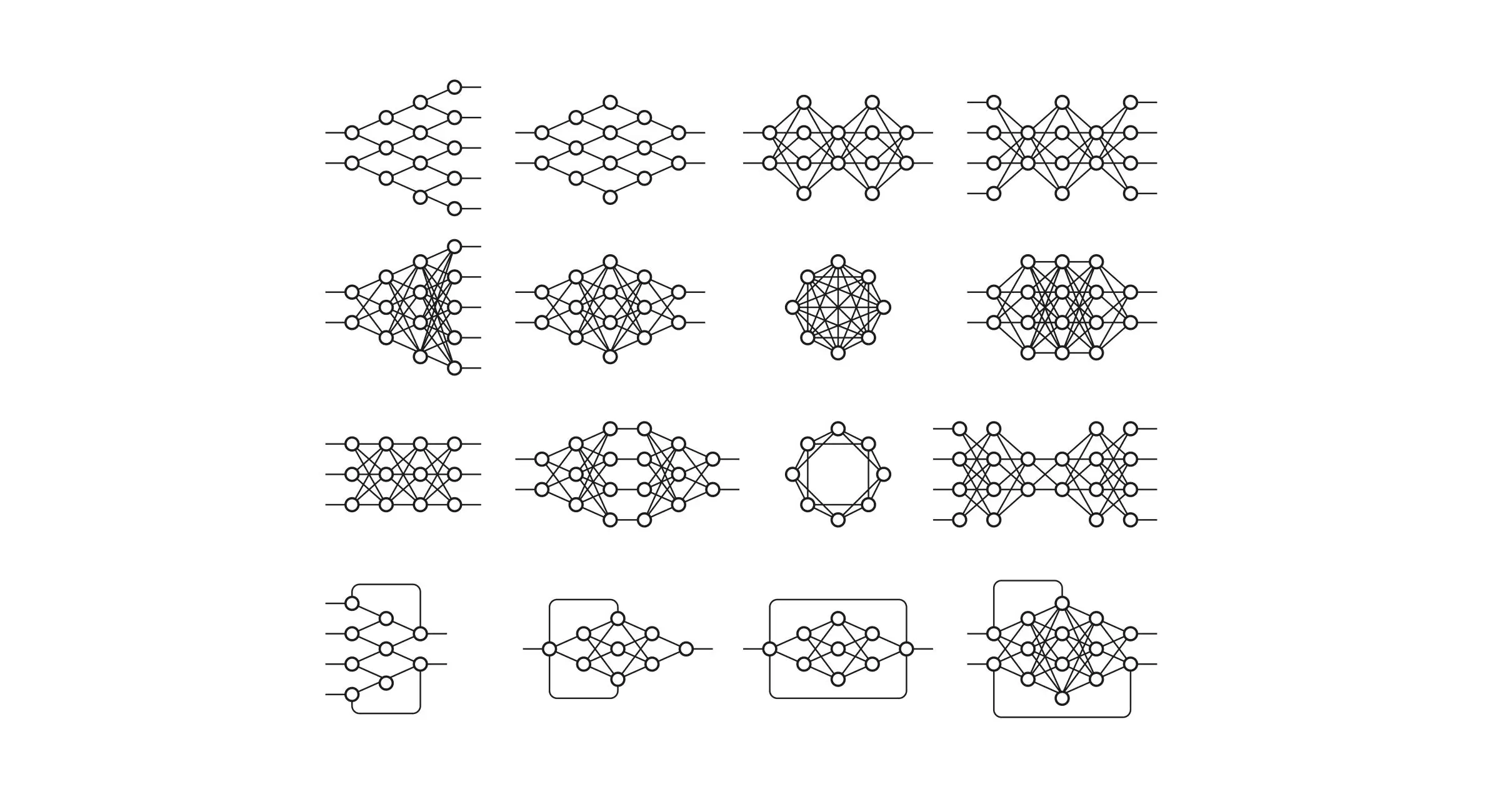

All the essential Deep Learning Algorithms you need to know including models used in Computer Vision and Natural Language Processing.

We are releasing HiPlot, a lightweight interactive visualization tool to help AI researchers discover correlations and patterns in high-dimensional data.

In this task we will try our first approach at training a conversational model.

Clearview AI devised a groundbreaking facial recognition app. You take a picture of a person, upload it and get to see public photos of that person, along with links to where those photos appeared. The system — whose backbone is a database of more than three billion images that Clearview claims to have scraped from Facebook, YouTube, Venmo and millions of other websites — goes far beyond anything ever constructed by the United States government or Silicon Valley giants.

Facebook AI has developed the first neural network that uses symbolic reasoning to solve advanced mathematics problems.

Federal public comment websites currently are unable to detect Deepfake Text once submitted. I created a computer program (a bot) that generated and submitted 1,001 deepfake comments regarding a Medicaid reform waiver to a federal public comment website, stopping submission when these comments comprised more than half of all submitted comments. I then formally withdrew the bot comments.

Artificial intelligence bots have achieved superhuman results in zero-sum games such as chess, Go, and poker, in which each player tries to defeat the others. However, just like humans, real-world AI systems must learn to coordinate in cooperative environments as well.

To advance research on AI that can understand other points of view and collaborate effectively, Facebook AI has developed a bot that sets a new state of the art in Hanabi, a card game in which all players work together. Our bot not only improves upon previous AI systems but also exceeds the performance of elite human players, as judged by experienced players who have evaluated it.

This article discusses the GPT-2 and BERT models, as well as using knowledge distillation to create highly accurate models with fewer parameters than their teachers.

What should worry us most about artificial intelligence: losing our jobs to cheaper labor or losing our lives to killer robots? The real threat may lie in yet another danger: losing our minds.

AI research is making great strides toward its long-term goal of human-level or superhuman intelligent machines. If it succeeds in its current form, however, that could well be catastrophic for the human race. The reason is that the “standard model” of AI requires machines to pursue a fixed objective specified by humans.

We are unable to specify the objective completely and correctly, nor can we anticipate or prevent the harms that machines pursuing an incorrect objective will create when operating on a global scale with superhuman capabilities. Already, we see examples such as social-media algorithms that learn to optimize click-through by manipulating human preferences, with disastrous consequences for democratic systems.

A new release from OpenAI shows how complex behavior emerges.

This week, leading AI lab OpenAI released their latest project: an AI that can play hide-and-seek. It’s the latest example of how, with current machine learning techniques, a very simple setup can produce shockingly sophisticated results.

Fake Text uses AI to analyze text and then generate incredibly detailed and realistic written responses to it, giving the impression that an exchange between humans is taking place. The AI analyses text patterns to put together disturbingly lucid text, typified by this Reddit thread.

Launched by leading global AI research lab OpenAI, Fake Text is already recognized as so potentially dangerous that even its inventors have publicly warned about it.

A toy project started to see how well a simple LSTM model can autocomplete Python code.

It gives quite decent results, saving more than 30% of keystrokes in most files and close to 50% in some. We calculated keystrokes saved by making a single (best) prediction and selecting it with a single key.
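As a rough illustration of how such a keystroke-saving figure could be computed, here is a minimal sketch. It is not the project's actual evaluation code: the `keystrokes_saved` function, the `toy_predict` stand-in for the LSTM, and the rule that a suggestion is accepted only when it exactly matches the upcoming text are all assumptions made for this example.

```python
from typing import Callable


def keystrokes_saved(text: str, predict: Callable[[str], str]) -> float:
    """Fraction of key presses saved when the single best suggestion
    can be accepted with one key (assumed acceptance rule)."""
    if not text:
        return 0.0
    presses = 0
    pos = 0
    while pos < len(text):
        suggestion = predict(text[:pos])
        # Accept only if the suggestion matches the upcoming text exactly
        # and is longer than one character; otherwise type one character.
        if len(suggestion) > 1 and text.startswith(suggestion, pos):
            pos += len(suggestion)
        else:
            pos += 1
        presses += 1  # one key either way: accept or type
    return 1 - presses / len(text)


def toy_predict(prefix: str) -> str:
    """Hypothetical predictor standing in for the LSTM."""
    return "port " if prefix.endswith("im") else ""


sample = "import os\nimport sys\n"
print(f"{keystrokes_saved(sample, toy_predict):.1%} of keystrokes saved")
```

With a real model, `predict` would return the model's best completion for the text typed so far, and the same loop would be run over each source file.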

We do a beam search to find predictions up to ~10 characters ahead. In case you are wondering about editor integration: so far it's too inefficient.
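For readers unfamiliar with the technique, a character-level beam search can be sketched as follows. This is a generic illustration, not the project's implementation: `beam_search`, `toy_model`, and the probability table are hypothetical, and a real setup would query the LSTM for next-character probabilities instead.

```python
import math
from typing import Callable, Dict, List, Tuple


def beam_search(
    prefix: str,
    next_char_probs: Callable[[str], Dict[str, float]],
    beam_width: int = 3,
    max_len: int = 10,
) -> str:
    """Return the most probable continuation of `prefix`, up to `max_len` characters."""
    beams: List[Tuple[str, float]] = [("", 0.0)]  # (generated text, log probability)
    for _ in range(max_len):
        candidates: List[Tuple[str, float]] = []
        for generated, score in beams:
            for ch, p in next_char_probs(prefix + generated).items():
                if p > 0:
                    candidates.append((generated + ch, score + math.log(p)))
        if not candidates:
            break  # no beam can be extended any further
        # Keep only the best `beam_width` partial continuations;
        # beams that could not be extended are dropped here.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    return beams[0][0]


# Hypothetical next-character table keyed on the full context.
TOY_TABLE: Dict[str, Dict[str, float]] = {
    "f": {"o": 0.6, "r": 0.4},
    "fo": {"r": 0.5, "o": 0.5},
    "fr": {"o": 1.0},
    "fro": {"m": 1.0},
}


def toy_model(context: str) -> Dict[str, float]:
    return TOY_TABLE.get(context, {})


print(beam_search("f", toy_model, beam_width=2))  # prints "rom"
print(beam_search("f", toy_model, beam_width=1))  # prints "or"
```

With a beam width of 1 the search is greedy and commits to the locally likeliest `o`; with a width of 2 it keeps `r` alive and finds the more probable continuation overall. The cost grows with the beam width times the number of candidate characters at every step, which is one plausible reason such a search is slow for interactive use.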